Chevron Corporation’s (CVX) global refining system manufactures fuels and other products sold by the company’s marketing, lubricants, and supply and trading organizations under the Chevron®, Texaco®, and Caltex® brands. The system can process heavy crude oils and produce a variety of high-value products, including transportation fuels, chemicals, and lubricants. This value chain supports product sales of 2.4 million barrels per day from seven refineries across a network of 8,000 retail stations.

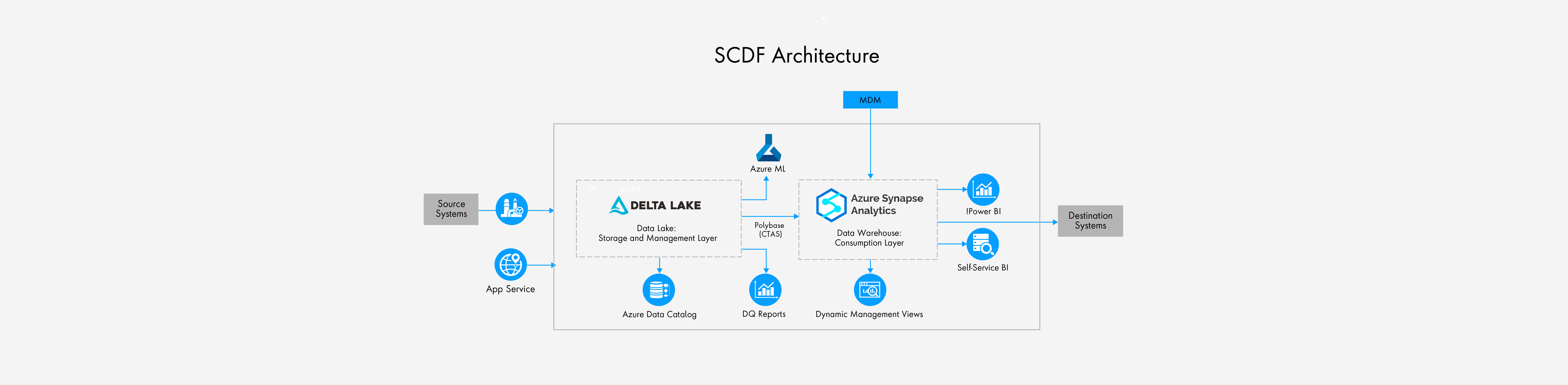

The Commodity Supply Chain Management (CSCM) Data and Insights team manages more than 200 data pipelines that ingest data from various internal and external sources and standardize it in the Supply Chain Data Foundation (SCDF). SCDF is central to data integration and related initiatives across the many functions that manage the flow of crude oil and refined products, including:

- replenishment planning and scheduling

- inventory management

- price and demand forecasting

- contract planning

- product quality and blending

- margin analysis

- common master data